First, I need to clarify that this blog post is only about ADF Mapping Data Flow, not the whole ADF service. I am not going to challenge ADF’s role as the superb orchestration service in the Azure data ecosystem. In fact, I love ADF. At the control flow level, it is neat, easy to use, and can be very flexible if you are a little creative and imaginative. To avoid potential confusion, I define “Hand-Coded Transformations” and “ADF Mapping Data Flow” in this blog post as:

- Hand-Coded Transformations – Programming transformation logic in code (PySpark, SQL, or others) and orchestrating the execution of that code with ADF data transformation activities in the control flow, such as the Databricks activities, the Stored Procedure activity, and the Custom activity.

- ADF Mapping Data Flow – Programming transformation logic using the ADF Mapping Data Flow visual editor.

I totally understand why people prefer a GUI-based, ready-to-use, code-free ETL tool over manually crafting data wrangling and transformation code. Intuitively, this is a no-brainer choice for people who view it from 10,000 feet. However, the devil is in the details. Here is the list of reasons why I prefer hand-coded transformations over ADF Mapping Data Flow:

- One of the main drivers for using ADF Mapping Data Flow is probably its simplicity and gentle learning curve. This may be true for simple, generic transformation logic. However, for more complicated, domain-specific requirements, ADF Mapping Data Flow is not necessarily simpler than writing code in SQL or Python. Such requirements either force you to build complex data flow logic, or you eventually have to embed custom code in the data flow anyway. On the other hand, the hand-coded approach can be much simpler thanks to the flexibility of custom coding and the huge number of ready-to-use data wrangling and transformation libraries.

- You may argue that ADF Mapping Data Flow is for people who don’t know how to code. However, ADF does not play the role of the self-service ETL tool in the Azure data ecosystem (Power Query does). The target users of ADF should be data engineers or DataOps engineers rather than data analysts. I understand that some junior data engineers may resist coding and shy away from it without even trying. ADF Mapping Data Flow gives them an opportunity to escape and actually takes away their motivation to learn to code.

- We can implement some validation logic in an ADF Mapping Data Flow, but it would be very high-level and simple. It does not allow us to run comprehensive unit tests on the transformation logic.

- One of the main reasons I prefer coding over the visual editor is reusability, including the reusability of domain-specific business logic and of domain-independent, non-functional logic such as auditing, QA and exception handling. As ADF Mapping Data Flow works in a declarative paradigm, it is difficult to abstract and generalise the logic implemented in it. On the other hand, abstraction and generalisation are easy to implement with custom coding.

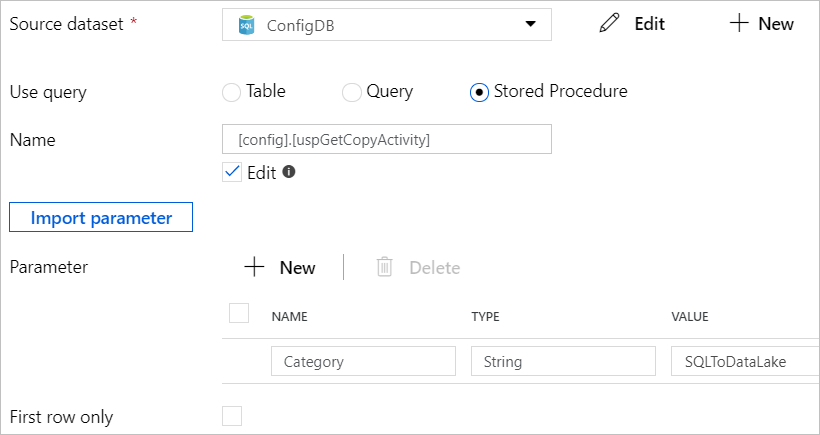

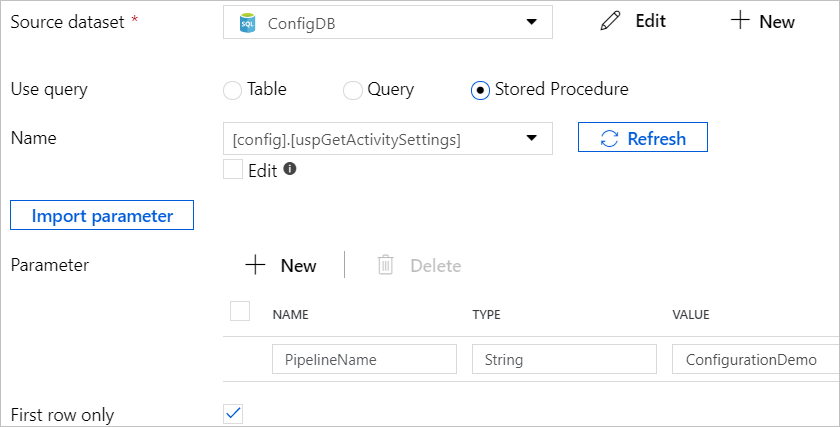

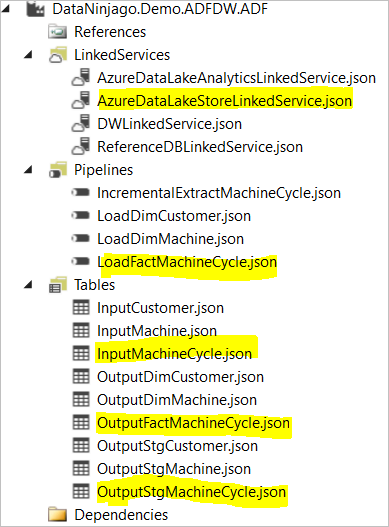

- Custom coding allows you to build configurable and metadata-driven pipelines. A configurable, metadata-driven design can significantly improve the maintainability of your ADF pipelines. One of my previous blog posts describes some of my early designs of metadata-driven ADF pipelines with custom coding in SQL. If you are interested, you can find the blog post here.

- The last, but also the most important, reason for me to favour the hand-coded approach is the control and flexibility it gives me to withstand the uncertainty in my projects. Just like any other IT project, it is not realistic to predict and control every possible situation in a data engineering project. There are always surprises popping up or user requirements changing. A code-free tool like ADF Mapping Data Flow hides the implementation under the hood and requires you to work its way. If an unpredicted situation arises and the code-free tool cannot handle it, it is expensive to change the tool at a late stage of a project. Compared to a code-free tool, hand-coding gives you more flexibility and resilience to handle unpredicted situations.
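
To make the unit testing, reusability and metadata-driven points above a bit more concrete, here is a minimal sketch in plain Python. It is only an illustration, not code from a real project: the names `audited`, `TRANSFORMS`, `drop_nulls`, `add_total`, `run_pipeline` and `STEPS` are all hypothetical, rows are simple dictionaries instead of PySpark DataFrames, and the step metadata is hard-coded where a real pipeline would more likely read it from a configuration table or file.

```python
from functools import wraps

# Hypothetical registry: maps a step name used in metadata to a transformation.
TRANSFORMS = {}


def audited(func):
    """Reusable, domain-independent wrapper that logs row counts per step."""
    @wraps(func)
    def wrapper(rows, audit_log, **params):
        result = func(rows, **params)
        audit_log.append(
            {"step": func.__name__, "rows_in": len(rows), "rows_out": len(result)}
        )
        return result

    TRANSFORMS[func.__name__] = wrapper  # register under the function's name
    return wrapper


@audited
def drop_nulls(rows, column):
    """Generic step: keep only rows where `column` has a value."""
    return [r for r in rows if r.get(column) is not None]


@audited
def add_total(rows, price_col, qty_col, target):
    """Domain-specific step: derive a total from price and quantity."""
    return [{**r, target: r[price_col] * r[qty_col]} for r in rows]


def run_pipeline(rows, steps, audit_log):
    """Run the steps described by metadata, in order."""
    for step in steps:
        rows = TRANSFORMS[step["name"]](rows, audit_log, **step.get("params", {}))
    return rows


# Metadata describing the pipeline (assumed format, for illustration only).
STEPS = [
    {"name": "drop_nulls", "params": {"column": "qty"}},
    {"name": "add_total",
     "params": {"price_col": "price", "qty_col": "qty", "target": "total"}},
]


def test_pipeline():
    """A unit test that needs no cluster, no ADF and no debug session."""
    rows = [{"price": 2.0, "qty": 3}, {"price": 5.0, "qty": None}]
    audit_log = []
    result = run_pipeline(rows, STEPS, audit_log)
    assert result == [{"price": 2.0, "qty": 3, "total": 6.0}]
    assert [(a["rows_in"], a["rows_out"]) for a in audit_log] == [(2, 1), (1, 1)]
```

The audit wrapper is written once and applies to every step, changing the pipeline means editing `STEPS` rather than the code, and `test_pipeline` can run in any ordinary test runner. In this setup, ADF would only orchestrate the execution, for example through a Databricks activity.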
