
In the previous posts in the SSIS in Azure series, we created an SSIS package that periodically ingests data from Azure SQL Database into Azure Data Lake Store, and deployed the package to the Azure-SSIS Integration Runtime. Up to this point, we have achieved two goals in the series:

- Using SSIS to move data between cloud storage services

- Hosting and running SSIS packages in the cloud

The last goal is to schedule the execution of the SSIS package in the cloud. Traditionally, we would schedule SSIS package execution by creating a SQL Server Agent job. However, that requires either an on-premises SQL Server instance or a SQL Server VM provisioned in Azure. Thanks to the Stored Procedure activity in ADF, we can now schedule the SSIS package execution using a cloud-based ADF pipeline. This blog post walks through the steps to achieve that.

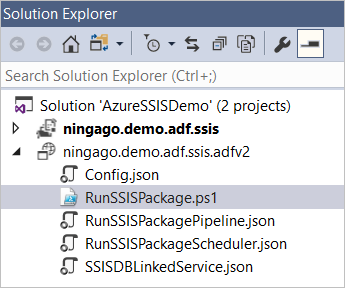

You can find the source code created for this blog post on my GitHub. The ningago.demo.adf.ssis.adfv2 project contains the JSON and PowerShell files used to create the ADF pipeline.

The key to this solution is to call the sp_executesql stored procedure from the RunSSISPackagePipeline pipeline, which executes the SQL script that triggers the SSIS package execution. We define a Stored Procedure activity in the pipeline that calls sp_executesql and passes in that SQL script as a parameter.

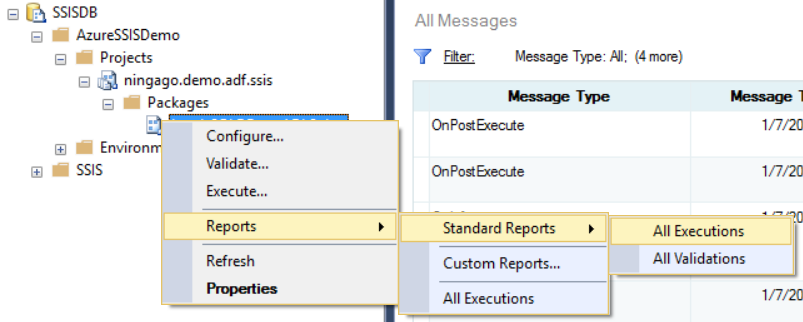
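As a rough sketch of the activity described above, the pipeline definition could look like the following. The activity name `RunSSISPackageActivity` and the placeholder statement are illustrative assumptions. The pipeline name and the `SSISDBLinkedService` reference are taken from the deployment script later in this post, and the actual file in the repository may differ.

```json
{
    "name": "RunSSISPackagePipeline",
    "properties": {
        "activities": [
            {
                "name": "RunSSISPackageActivity",
                "description": "Hypothetical activity name; calls sp_executesql in SSISDB",
                "type": "SqlServerStoredProcedure",
                "linkedServiceName": {
                    "referenceName": "SSISDBLinkedService",
                    "type": "LinkedServiceReference"
                },
                "typeProperties": {
                    "storedProcedureName": "sp_executesql",
                    "storedProcedureParameters": {
                        "stmt": {
                            "value": "<SQL script that triggers the SSIS package execution>"
                        }
                    }
                }
            }
        ]
    }
}
```

`stmt` is the name of the first parameter of sp_executesql, so whatever string is placed in its value is executed as a T-SQL batch against the database behind the linked service.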

We don’t have to author the SQL script from scratch; we can generate it using SSMS. First, we connect to the SSISDB catalog in our Azure-SSIS Integration Runtime (please refer to SSIS in Azure #2 for how to do that), select the SSIS project or package we want to execute, and click the Execute button to open the Execute Package dialog window.

In the Execute Package dialog window, we select the package to execute and set a value (any value will do) for the package parameter. Setting a value ensures that the parameter-setting statement is generated in the SQL script.

We then click the “Script” button at the top left, which generates the SQL script for executing the selected SSIS package.

We can then copy the script into the Stored Procedure activity defined in the RunSSISPackagePipeline pipeline.
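For illustration, the activity's type properties with a script pasted in might look like the sketch below. The folder, project and package names are placeholders and the date is arbitrary. The script is a trimmed approximation of what SSMS typically generates rather than the exact output, and it assumes DateScliceToLoad is a string package parameter.

```json
{
    "typeProperties": {
        "storedProcedureName": "sp_executesql",
        "storedProcedureParameters": {
            "stmt": {
                "value": "DECLARE @execution_id BIGINT; EXEC [SSISDB].[catalog].[create_execution] @package_name=N'<package>.dtsx', @execution_id=@execution_id OUTPUT, @folder_name=N'<folder>', @project_name=N'<project>', @use32bitruntime=0, @reference_id=NULL; DECLARE @var0 SQL_VARIANT = N'2018-01-01'; EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id, @object_type=30, @parameter_name=N'DateScliceToLoad', @parameter_value=@var0; EXEC [SSISDB].[catalog].[start_execution] @execution_id;"
            }
        }
    }
}
```

Two things are worth watching when pasting. JSON strings cannot contain raw line breaks, so the script has to be collapsed onto one line. Any `GO` that SSMS appends should also be dropped, because it is a client-side batch separator rather than T-SQL. The `@object_type=30` argument marks DateScliceToLoad as a package-level parameter.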

As you may have noticed, we manually set the SSIS package parameter, DateScliceToLoad, to an arbitrary value. The next step is to pass the date of the pipeline run into the SQL script so that the SSIS package only moves the data for the given day. First, we need to define the “scheduledRunTime” parameter in the RunSSISPackagePipeline pipeline, which will receive the scheduled run time from the pipeline trigger we will create later.

In the SQL script we just generated to trigger the SSIS package execution, we replace the arbitrary date we set manually with an ADF expression that points to the scheduledRunTime parameter of the pipeline.
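Putting the last two steps together, a hedged sketch of the parameterized pipeline is shown below. It assumes the parameter is declared as a `String` and that the value is spliced into the script with ADF string interpolation. The activity name and the `<folder>`, `<project>` and `<package>` placeholders are illustrative and not taken from the repository.

```json
{
    "name": "RunSSISPackagePipeline",
    "properties": {
        "parameters": {
            "scheduledRunTime": {
                "type": "String"
            }
        },
        "activities": [
            {
                "name": "RunSSISPackageActivity",
                "type": "SqlServerStoredProcedure",
                "linkedServiceName": {
                    "referenceName": "SSISDBLinkedService",
                    "type": "LinkedServiceReference"
                },
                "typeProperties": {
                    "storedProcedureName": "sp_executesql",
                    "storedProcedureParameters": {
                        "stmt": {
                            "value": "DECLARE @execution_id BIGINT; EXEC [SSISDB].[catalog].[create_execution] @package_name=N'<package>.dtsx', @execution_id=@execution_id OUTPUT, @folder_name=N'<folder>', @project_name=N'<project>', @use32bitruntime=0, @reference_id=NULL; DECLARE @var0 SQL_VARIANT = N'@{pipeline().parameters.scheduledRunTime}'; EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id, @object_type=30, @parameter_name=N'DateScliceToLoad', @parameter_value=@var0; EXEC [SSISDB].[catalog].[start_execution] @execution_id;"
                        }
                    }
                }
            }
        ]
    }
}
```

The `@{...}` form is evaluated at run time and its result is inserted into the surrounding string, so the script receives the scheduled time as a literal. Because the string begins with `DECLARE` rather than `@`, the T-SQL variables such as `@execution_id` are left as plain text.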

Next, we need to create the trigger JSON file to schedule the execution of the pipeline. After we specify the pipeline to execute in the trigger file, we pass the trigger’s scheduledTime, which is a system variable, to the scheduledRunTime parameter defined in the pipeline.

    {
        "properties": {
            "name": "RunSSISPackageScheduler",
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Hour",
                    "interval": 1,
                    "startTime": "2018-01-07T00:00:00-22:00"
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": "RunSSISPackagePipeline"
                    },
                    "parameters": {
                        "scheduledRunTime": "@trigger().scheduledTime"
                    }
                }
            ]
        }
    }

After we have created the JSON files that define the linked service, the pipeline and the trigger, we need to deploy them into our data factory instance. As ADF V2 does not support deployment through the UI yet, we need to create a deployment script using PowerShell.

    $DataFactoryName = "ninjago3843adf"
    $ResourceGroupName = "SSIS"

    # Deploy the linked service that points to SSISDB
    Set-AzureRmDataFactoryV2LinkedService -DataFactoryName $DataFactoryName -ResourceGroupName $ResourceGroupName -Name "SSISDBLinkedService" -File ".\SSISDBLinkedService.json"

    # Deploy the pipeline that runs the SSIS package
    Set-AzureRmDataFactoryV2Pipeline -DataFactoryName $DataFactoryName -ResourceGroupName $ResourceGroupName -Name "RunSSISPackagePipeline" -DefinitionFile ".\RunSSISPackagePipeline.json"

    # Stop the trigger before updating it, then redeploy and restart it
    Stop-AzureRmDataFactoryV2Trigger -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -Name "RunSSISPackageScheduler"
    Set-AzureRmDataFactoryV2Trigger -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -Name "RunSSISPackageScheduler" -DefinitionFile ".\RunSSISPackageScheduler.json"
    Start-AzureRmDataFactoryV2Trigger -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -Name "RunSSISPackageScheduler"

Once the pipeline is deployed and the trigger is started, the pipeline will execute our SSIS package according to the schedule.
