In one of my previous blog post, I described an approach to load text files from Azure Data Lake Store to Power BI filtered by the date period specified by users through setting the period start and period end parameters. That approach is capable to load text files located at the same folder. However, there is another common folder structure pattern that is often used to organise the files in Azure Data Lake Store, i.e. the Year-Month-Day folder hierarchy, such as:

It raises a challenge when users want to load files between two dates into Power BI. Firstly, the Power Query query we created needs to be able to load files from different folders. In addition, the query should be able to conditionally load the files based on the data period specified by users through filtering the Year-Month-Day folder hierarchy. This blog post describes how to create Power Query queries for this type of requirements with the following steps.

It raises a challenge when users want to load files between two dates into Power BI. Firstly, the Power Query query we created needs to be able to load files from different folders. In addition, the query should be able to conditionally load the files based on the data period specified by users through filtering the Year-Month-Day folder hierarchy. This blog post describes how to create Power Query queries for this type of requirements with the following steps.

- Step 1 – Define the StartDate parameter and EndDate parameter for users to specify the date period

- Step 2 – Define a custom function to load the metadata of all the files stored in a folder. The custom function takes folder path as argument and returns a table value which stores the file metadata

- Step 3 – Generate the sequence of dates between the specified StartDate and EndDate

- Step 4 – Derive the folder path from the generated date

- Step 5 – Invoke the custom function created at step 2 and expand the returned table field to get the list of files we aim to extract

- Step 6 – Combine the files and convert to table format

Step 1 – Define parameters

Firstly, we define two Date type of parameters, StartDate and EndDate.

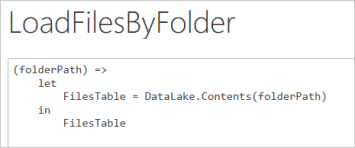

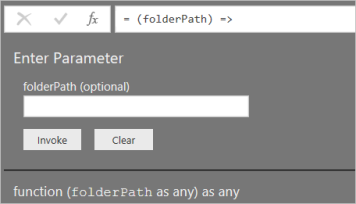

Step 2 – Define custom function

We then define a custom function that takes the path of a folder as argument and return a table value with the metadata of all files stored at that folder.

Step 3 – Generate date list

We then need to create the query to extract the files from the Year-Month-Day folder hierarchy filtered by the StartDate and EndDate parameters. Firstly, we generate the sequence of dates between the StartDate and the EndDate using built-in List.Dates function:

Step 4 – Derive the folder path

We then convert the dates list into a table and add the “DatePathPart” column that generate the Year/Month/Day part of the folder path such as “2008/06/09”. We then add the “FolderPath” column that makes the full folder path through concatenating the root folder path and the Year/Month/Day part.

After this step, we should have a table like this:

Step 5 – Invoke the custom function and expand returned table

We can then invoke the custom function defined at step 2 that will add a table type column. Once we expand the table, we will have the list of all the files we want to query based on the specified StartDate and EndDate parameters.

This is the full piece of code for this query:

| let | |

| #"Filtered Dates" = List.Dates(StartDate, Duration.Days(EndDate - StartDate)+1, #duration(1, 0, 0, 0)), | |

| #"Dates Table"= Table.FromList(#"Filtered Dates", Splitter.SplitByNothing(), {"Date"}, null, ExtraValues.Error), | |

| #"Extract Date Path" = Table.AddColumn(#"Dates Table", "DatePathPart",each Text.From(Date.Year([Date])) & "/" | |

| & Text.PadStart(Text.From(Date.Month([Date])),2,"0") & "/" | |

| & Text.PadStart(Text.From(Date.Day([Date])),2,"0")), | |

| #"Add Root Path" = Table.AddColumn(#"Extract Date Path", "RootPath", | |

| each Text.From("adl://ninjagodem0123.azuredatalakestore.net/Samples/Demo/")), | |

| #"Add Folde Path" = Table.AddColumn(#"Add Root Path", "FolderPath", each [RootPath] & [DatePathPart]), | |

| #"Invoked Custom Function" = Table.AddColumn(#"Add Folde Path", "Files", each LoadFilesByFolder(Text.From([FolderPath]))), | |

| #"Expanded Files" = Table.ExpandTableColumn(#"Invoked Custom Function", "Files", | |

| {"Content", "Name", "Extension", "Date accessed", "Date modified", "Date created", "Attributes", "Folder Path"}, | |

| {"Files.Content", "Files.Name", "Files.Extension", "Files.Date accessed", | |

| "Files.Date modified", "Files.Date created", "Files.Attributes", "Files.Folder Path"}) | |

| in | |

| #"Expanded Files" |

Step 6 – Combine the files

The last step is to combine the list of files we queried at step 1 – 5, extract the data records from the binary csv format and load into a single table for downstream process.

You must be logged in to post a comment.