*The source code created for this blog post is located here.

The low-cost, schema-less and large-column attributes of Azure Data Lake Store, along with the large number of supported analytic engines (e.g., Azure Data Lake Analytics, Hive and Spark), make it a perfect store-everything repository for enterprise data. We can land offline copies of business data from various LOB data sources in Azure Data Lake Store for all sorts of batch analysis.

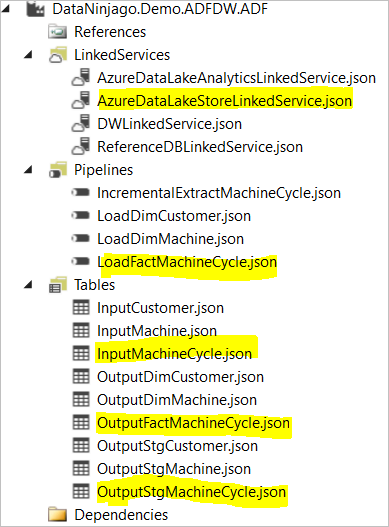

Microsoft provides Azure Data Factory, the cloud-based ETL service in the Azure Cortana Intelligence Suite, to support data ingestion into Azure Data Lake Store. However, many data engineers working with the Microsoft BI stack may prefer SSIS, a tool they are already familiar with that offers an easy-to-use visual editor and a rich collection of transformation components, over Azure Data Factory, where they have to author JSON files to define data source links, datasets, and pipelines (at least in Azure Data Factory V1; a visual editor is planned for Azure Data Factory V2 but is not available yet).

Thanks to the Azure-SSIS integration runtime, which is available in public preview in Azure Data Factory V2, we can now deploy and execute our SSIS packages in Azure, which gives us an alternative option for cloud-based ETL.

This blog post shows how to move data in the cloud using SSIS, with an example of a common use case: periodically ingesting data from a SQL database into Azure Data Lake Store. There are two key requirements for this use case:

- SSIS needs to be able to connect to the Azure SQL database and load the data into a csv file in a specified folder in Azure Data Lake Store.

- SSIS needs to be able to load data periodically and incrementally from the Azure SQL database into a csv file for that period. The csv files need to be organised in a date hierarchy for optimised performance of Azure Data Lake Store.

For the first requirement, we need the SSIS Feature Pack for Azure, an SSIS extension for connecting to Azure services and moving data between Azure data sources or between on-premises data sources and Azure data sources. For the second requirement, we need an SSIS trick for dynamically setting attributes on a data flow destination component. The rest of this blog post covers the details of fulfilling those two requirements.

Firstly, we need to install the SSIS Feature Pack for Azure for Visual Studio (the matching version of SSDT should already be installed in Visual Studio). We should be able to see the Azure connection components in the SSIS toolbox after the feature pack is installed.

Before starting to build the SSIS package, we need to create an Azure AD service principal as the service account for accessing Azure Data Lake Store, and grant the principal read/write permission on the folder in Azure Data Lake Store where the output csv files will be stored.

We then create an SSIS project in SSDT and add a Data Flow Task.

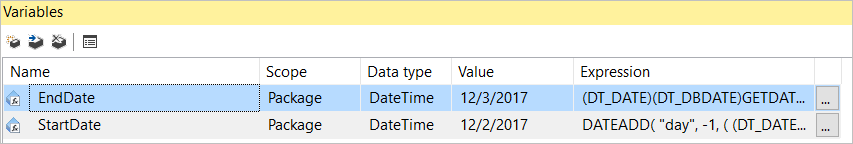

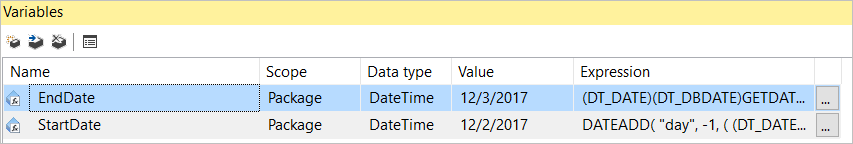

Open the Data Flow tab and add an ADO NET source, which will connect to the Azure SQL database that the data will be extracted from. In this example, we use the AdventureWorks sample database as the data source and transfer the sale orders data into Azure Data Lake Store. To extract the sale orders periodically, we first define two variables in the SSIS package, "StartDate" and "EndDate". In this example, we want to load the sale orders at a daily interval. The SSIS package is expected to run early every morning to load the data of the previous day. Therefore, the value of the StartDate variable will be: DATEADD("day", -1, ((DT_DATE)(DT_DBDATE)GETDATE())) and the value of EndDate will be: (DT_DATE)(DT_DBDATE)GETDATE().
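As a rough illustration of what the two variable expressions evaluate to, here is a minimal Python sketch that mirrors them outside SSIS. The function name `daily_window` is hypothetical and not part of the package; it only shows the date arithmetic: truncate the current timestamp to midnight for EndDate, then step back one day for StartDate.

```python
from datetime import datetime, time, timedelta
from typing import Tuple


def daily_window(now: datetime) -> Tuple[datetime, datetime]:
    """Return (start_date, end_date) covering the previous calendar day."""
    # Mirrors (DT_DATE)(DT_DBDATE)GETDATE(): drop the time part of "now".
    end_date = datetime.combine(now.date(), time.min)
    # Mirrors DATEADD("day", -1, ...): step back exactly one day.
    start_date = end_date - timedelta(days=1)
    return start_date, end_date


if __name__ == "__main__":
    start, end = daily_window(datetime.now())
    print("StartDate:", start)
    print("EndDate:  ", end)
```

Whatever time of the morning the package runs, both values fall on midnight boundaries, so every run covers one full day.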

Then we want to extract the sale order records with a LastModified datetime between the StartDate and the EndDate. In this example, we first create a stored procedure, uspGetSaleOrders, in the source SQL database that takes the StartDate and EndDate as parameters and returns the sale orders between those dates. If you do not have permission to create stored procedures in your data sources, you can store the SQL script in an SSIS variable instead.
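The body of uspGetSaleOrders is not shown in this post, so the following is only a stand-in sketch of the kind of date-window filter such a procedure might apply. It uses an in-memory SQLite database so it can run anywhere; the table name `SaleOrders`, the column names, and the half-open window (StartDate inclusive, EndDate exclusive) are all assumptions made for illustration, not the actual procedure.

```python
import sqlite3


def demo_connection() -> sqlite3.Connection:
    """Build a tiny in-memory table with hypothetical sale order rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE SaleOrders (OrderId INTEGER, LastModified TEXT)")
    conn.executemany(
        "INSERT INTO SaleOrders VALUES (?, ?)",
        [
            (1, "2014-06-29 23:59:59"),
            (2, "2014-06-30 00:00:00"),
            (3, "2014-06-30 18:30:00"),
            (4, "2014-07-01 00:00:00"),
        ],
    )
    return conn


def get_sale_orders(conn: sqlite3.Connection, start_date: str, end_date: str):
    """Return rows modified in [start_date, end_date).

    Assumption: a half-open window, so a row stamped exactly at midnight
    is picked up by one daily run only.
    """
    cur = conn.execute(
        "SELECT OrderId, LastModified FROM SaleOrders "
        "WHERE LastModified >= ? AND LastModified < ? "
        "ORDER BY OrderId",
        (start_date, end_date),
    )
    return cur.fetchall()


if __name__ == "__main__":
    rows = get_sale_orders(
        demo_connection(), "2014-06-30 00:00:00", "2014-07-01 00:00:00"
    )
    for row in rows:
        print(row)
```

If the real procedure uses an inclusive BETWEEN, rows stamped exactly at midnight could be loaded by two consecutive runs, which is worth checking against your own data.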

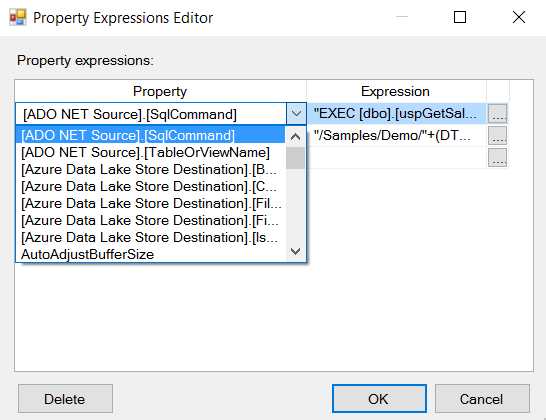

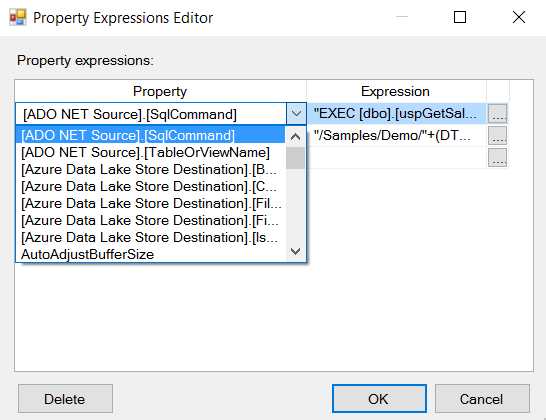

We then move to the Control Flow tab, open the properties panel of the Data Flow Task, and open the Expressions editor.

In the Expressions editor, we add an expression to dynamically set the SqlCommand property of the SQL database source as: "EXEC [dbo].[uspGetSaleOrders] @StartDate ='" + (DT_WSTR, 50)@[User::StartDate] + "', @EndDate = '" + (DT_WSTR, 50)@[User::EndDate] + "'". This command executes the stored procedure we created earlier, with the StartDate and EndDate variables passed in.
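To make the string concatenation easier to follow, here is a small Python sketch that assembles the same command text. The function name `build_sql_command` is hypothetical, and the timestamp format is an assumption: the exact text that the (DT_WSTR, 50) cast produces for a date in SSIS may differ, so treat the output as illustrative only.

```python
from datetime import datetime

# Assumed text format for the dates; the real (DT_WSTR, 50) cast may differ.
DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


def build_sql_command(start_date: datetime, end_date: datetime) -> str:
    """Concatenate the EXEC statement the same way the SSIS expression does."""
    return (
        "EXEC [dbo].[uspGetSaleOrders] @StartDate ='"
        + start_date.strftime(DATE_FORMAT)
        + "', @EndDate = '"
        + end_date.strftime(DATE_FORMAT)
        + "'"
    )


if __name__ == "__main__":
    print(build_sql_command(datetime(2014, 6, 30), datetime(2014, 7, 1)))
```

The single quotes sit inside the string literals, so each date ends up wrapped in quotes in the final T-SQL statement.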

Now that the data source is set up, we can move on to adding and configuring the Azure Data Lake Store Destination.

We add an Azure Data Lake Store Destination component in the Data Flow tab and add a data flow path from the SQL database source to the destination. In the Azure Data Lake Store Destination Editor window, we need to create a connection manager to manage the connection (including the store location and the authentication) to Azure Data Lake Store, and specify the output file path and the file format. As we will output the file in csv format, we select Text as the file format and "," as the column delimiter character.

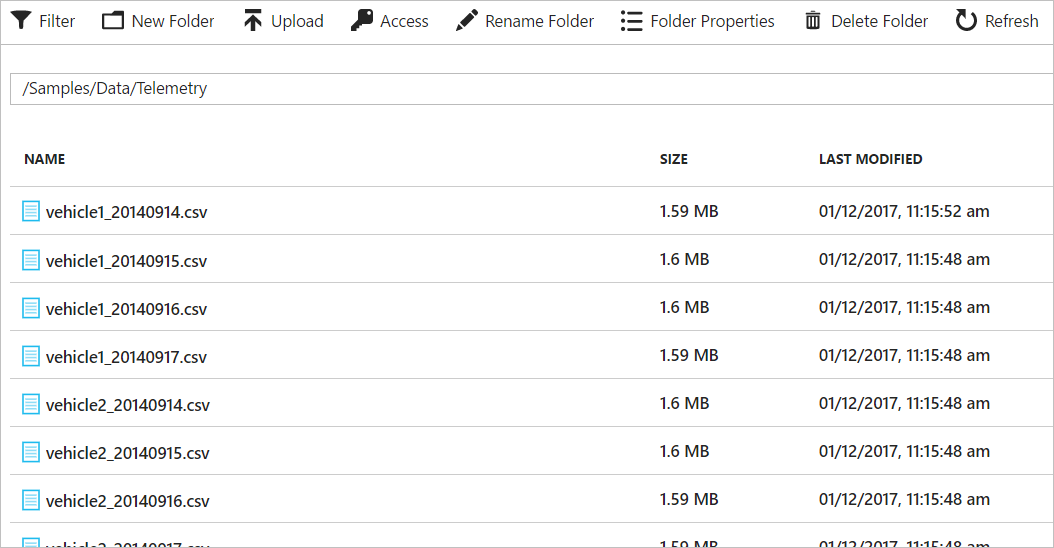

The interesting part is the File Path attribute. As we discussed earlier, we want to organise the files into a date hierarchy based on the modified date of the sale order records, so the file path will look like: "/{project folder}/{Year}/{Month}/{Day}/SaleOrders_{date string}.csv".

To dynamically set the file path of the Azure Data Lake Store Destination, we can add an expression in the parent Data Flow Task, as we did for the SqlCommand attribute of the SQL database source.

We define the expression for the file path as:

"/Samples/Demo/" + (DT_WSTR, 4)YEAR(@[User::EndDate]) + "/" + RIGHT("0" + (DT_WSTR, 2)MONTH(@[User::EndDate]), 2) + "/" + RIGHT("0" + (DT_WSTR, 2)DAY(@[User::EndDate]), 2) + "/SaleOrders_" + (DT_WSTR, 4)YEAR(@[User::EndDate]) + RIGHT("0" + (DT_WSTR, 2)MONTH(@[User::EndDate]), 2) + RIGHT("0" + (DT_WSTR, 2)DAY(@[User::EndDate]), 2) + ".csv"
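The expression is dense, so the following Python sketch reproduces the same path-building logic in a more readable form. The function name `build_file_path` and the `root` parameter are hypothetical; the zero-padding corresponds to the RIGHT("0" + ..., 2) idiom in the SSIS expression, and the path is derived from EndDate, as in the expression above.

```python
from datetime import date


def build_file_path(end_date: date, root: str = "/Samples/Demo") -> str:
    """Build /{root}/{Year}/{Month}/{Day}/SaleOrders_{yyyymmdd}.csv."""
    year = f"{end_date.year:04d}"
    # Two-digit zero padding, like RIGHT("0" + (DT_WSTR, 2)MONTH(...), 2).
    month = f"{end_date.month:02d}"
    day = f"{end_date.day:02d}"
    return f"{root}/{year}/{month}/{day}/SaleOrders_{year}{month}{day}.csv"


if __name__ == "__main__":
    print(build_file_path(date(2014, 7, 1)))
```

Zero-padding the month and day keeps the folders in chronological order when they are sorted by name, which makes the hierarchy easier to browse and filter.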

Now the Azure Data Lake Store Destination is set up and the data flow is ready to run. We can test the data flow in SSDT. As the sample AdventureWorks database does not contain sale order records for the period when this blog post was written, I manually set the StartDate and EndDate variables to a day that does have sale order records in the AdventureWorks database for testing purposes.

Now we can see the data flow is working when running on our local machine through SSDT. The next blog post will provision the Azure-SSIS Integration Runtime and deploy and run the SSIS package in the cloud.

